Authors:

(1) Wen Wang, Zhejiang University, Hangzhou, China and Equal Contribution (wwenxyz@zju.edu.cn);

(2) Canyu Zhao, Zhejiang University, Hangzhou, China and Equal Contribution (volcverse@zju.edu.cn);

(3) Hao Chen, Zhejiang University, Hangzhou, China (haochen.cad@zju.edu.cn);

(4) Zhekai Chen, Zhejiang University, Hangzhou, China (chenzhekai@zju.edu.cn);

(5) Kecheng Zheng, Zhejiang University, Hangzhou, China (zkechengzk@gmail.com);

(6) Chunhua Shen, Zhejiang University, Hangzhou, China (chunhuashen@zju.edu.cn).

Table of Links

2 RELATED WORK

2.1 Story Visualization

Story visualization aims to generate a series of visually consistent images from a story described in text. Limited by the generative capacity of the model, many story visualization approaches [Chen et al. 2022; Li 2022; Li et al. 2019; Maharana and Bansal 2021; Maharana et al. 2021, 2022; Pan et al. 2022; Rahman et al. 2022; Song et al. 2020] seek to largely simplify the task such that it becomes tractable, by considering specific characters, scenes, and image styles in a particular dataset. Early story visualization methods are mostly built upon GANs [Goodfellow et al. 2020]. For example, StoryGAN [Li et al. 2019] pioneers the story visualization task by proposing a GAN-based framework that considers both the full story and the current sentence for coherent image generation. CP-CSV [Song et al. 2020], DuCo-StoryGAN [Maharana et al. 2021], and VLC-StoryGAN [Li et al. 2019] follow the GAN-based framework, while improving the consistency of storytelling via better character-preserving or text understanding. Difference from these works, VP-CSV [Chen et al. 2022] leverages VQ-VAE a transformer-based language model for story visualization. StoryDALL-E [Maharana et al. 2022] leverages the pre-trained DALL-E [Ramesh et al. 2021] for better story visualization and proposes a novel task named story continuation that supports story visualization with a given initial image. AR-LDM [Pan et al. 2022] proposes a diffusion model-based method that generates story images in an autoregressive manner.

While progress has been made, these methods rely on storyspecific training on datasets like PororoSV [Li et al. 2019] and FlintstonesSV [Maharana and Bansal 2021], making it difficult to generalize these methods to varying characters and scenes.

The development of large-scale pre-trained text-to-image generative models [Ramesh et al. 2022, 2021; Rombach et al. 2022; Saharia et al. 2022] opens up new opportunities for generalizable story visualization. Several attempts have been made to generate storytelling images with diverse characters [Gong et al. 2023; Jeong et al. 2023; Liu et al. 2023c]. Jeong et al. [Jeong et al. 2023] utilized textual inversion [Gal et al. 2022] to swap human identities in story images, thus generalizing the characters in story visualization. However, the identity is not well preserved, and the method is limited to a single human character in storytelling. Intelligent Grimm [Liu et al. 2023c] proposes the task of open-ended visual storytelling. They collect a dataset of children’s storybooks and train an autoregressive generative model for story visualization. The limitation is clear: they focus on the storytelling of the children’s storybook style, and it needs to re-train the model to generalize to other styles, contents, etc., which is not scalable.

Probably the most similar work to ours is TaleCraft [Gong et al. 2023], which also proposes a systematic pipeline for story visualization. Note that, they require user-provided sketches for each character in each story image to obtain visually pleasing generations, which can be laborious to obtain. Moreover, all existing methods rely on multiple user-provided images for each character to obtain identity-coherent story visualizations. In contrast, our method allows for generating diverse and coherent story visualization results with only text descriptions as inputs.

2.2 Controllable Image Generation

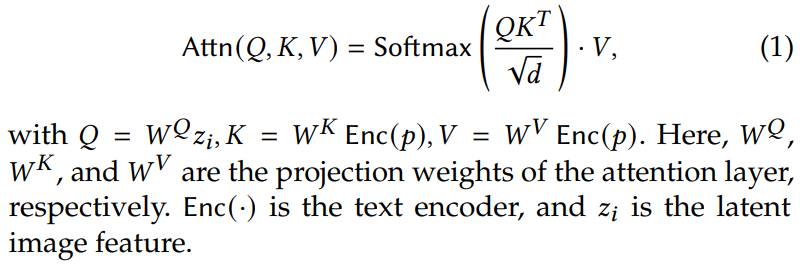

The scaling of text-image paired data [Schuhmann et al. 2022], computational resources, and model size have enabled unprecedented text-to-image (T2I) generation results [Ramesh et al. 2022, 2021; Rombach et al. 2022; Saharia et al. 2022]. Large-scale pre-trained text-to-image models, such as Stable Diffusion [Rombach et al. 2022], are capable of generating images from text, i.e., 𝐼 = DM (𝑝), where DM(·) is the pre-trained diffusion model and 𝑝 is the text prompt that describe the image 𝐼. In this process, the text information is passed into the image’s latent representation through cross-attention layers in the model. The attention [Vaswani et al. 2017] operation can be written as

However, limited by the language understanding capability of the text encoder and poor text-to-image content association [Chefer et al. 2023], T2I models, like Stable Diffusion [Rombach et al. 2022], can perform poorly in the generation of multiple characters and complex scenes [Chefer et al. 2023]. To alleviate this drawback, some approaches introduce explicit spatial guidance in T2I generative models. For example, ControlNet [Zhang and Agrawala 2023] uses zero convolution layers and a trainable copy of the original model weights, introducing reliable control in diffusion models. T2I-Adapter [Mou et al. 2023] achieves control ability by proposing the adapter that extracts guidance feature and adds it to the feature from the corresponding UNet encoder. GLIGEN [Li et al. 2023] injects a gated self-attention block into the UNet, enabling the model to make good use of the grounding inputs.

Inspired by the ability of large language models (LLMs) [et al 2023; OpenAI 2023] being able to understand and plan, recent works [Feng et al. 2023; Lian et al. 2023] employ LLM for layout generation. Specifically, LayoutGPT [Feng et al. 2023] achieves plausible results in 2D image layouts and even 3D indoor scene synthesis by applying in-context learning on LLMs. LLM-grounded Diffusion [Lian et al. 2023] proposes a two-stage process based on the LLM-generated layout and local prompts. Specifically, it first generates the local objects within each bounding box based on the corresponding local prompt, and then re-generates the final result based on the inversed latent of local objects. While effective, LLMgrounded Diffusion requires careful hyper-parameter tuning for the trade-off between structural guidance and inter-object relationship modeling. Moreover, it is difficult for the users to control the detailed structure of the generated objects. In contrast, we use the intuitive sketch or keypoint to guide the final image generation. Thus, we can not only achieve high-quality story image generation, but also allow interactive story visualization by simply tuning the generated sketch or keypoint conditions.

2.3 Customized Image Generation

Story visualization requires that the identities of characters and scenes in a story remain consistent across different images. Customized image generation can meet this requirement to a large extent. Early methods [Gal et al. 2022; Ruiz et al. 2022] focus on the customized generation of a single object. For example, DreamBooth [Ruiz et al. 2022] fine tunes the pre-trained T2I diffusion model under a class-specific prior-preservation loss. Textual Inversion [Gal et al. 2022] enables customized generation by inverting subject image content into text embeddings. Unlike these approaches, Custom Diffusion [Kumari et al. 2022] further achieves multi-subject customization by combining the multiple customization weights through closed-form constrained optimization. Cones [Liu et al. 2023a] finds that a small cluster of concept neurons in the diffusion model corresponds to a single subject, and thus achieves customized generation of multiple objects by combining these concept neurons. Cones2 [Liu et al. 2023f] further achieves more effective multi-object customization by combining text embedding of different concepts with simple layout control. Differently, Mix-of-Show [Gu et al. 2023] proposes gradient fusion to effectively combine multiple customized LoRA [Hu et al. 2022] weights and performs multi-object customization with the aid of the T2I-Adapter’s dense controls.

While significant progress has been made, existing methods perform poorly on one-shot customization. The training data for subject-driven generation has to be identity-consistent and diverse. As a result, existing story visualization methods require multiple user-provided images for each character. To tackle this issue, we propose a training-free consistency modeling method, and leverage the 3D prior in 3D-aware generative models [Liu et al. 2023d,b] to obtain multi-view consistent character images for customized generation, thus eliminating the reliance on human labor to collect or draw character images.

This paper is available on arxiv under CC BY-NC-ND 4.0 DEED license.